This is a guest post from Alexandra Lamachenka. Alexandra is the Head of Marketing at SplitMetrics, an A/B testing tool trusted by Rovio, MSQRD, Prisma and Zeptolab. She shows mobile development companies how to hack app store optimization by running A/B experiments and analyzing results.

Increase your app conversion rate with A/B testing

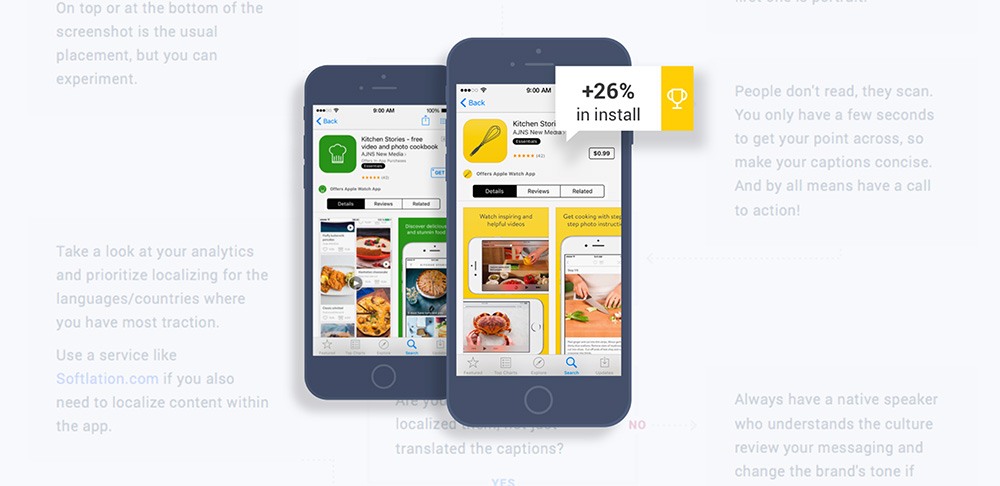

While the average conversion rate (to install) an app gets on the App Store is 26%, most applications strive to make their pages convert at least 17% of incoming traffic. A/B testing is a tool that can beat expectations and boost conversion to a cosmic 50-60%.

What is A/B Testing?

A/B testing is a method of comparing variations of app page (listing) elements and identifying the combination that drives the most taps and installs.

To run an A/B experiment on the app page, you determine which elements (screenshots, icons, titles, etc.) require optimization, create two or more page variations, and distribute the audience equally among them. People will interact differently depending on the landing page they see, and the app conversion rate will differ as well.
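One common way to split an audience evenly is to hash a visitor identifier and use the result to pick a variation. The sketch below is only an illustration of that idea, not a description of how SplitMetrics or any other tool distributes traffic; the function name `assign_variation`, the experiment label, and the visitor IDs are all hypothetical.

```python
import hashlib


def assign_variation(visitor_id, variations, experiment="icon-test"):
    """Pick a variation for a visitor in a stable, roughly uniform way.

    Hashing the experiment name together with the visitor ID means the same
    visitor always sees the same page, while different visitors are spread
    about evenly across the variations.
    """
    if not variations:
        raise ValueError("at least one variation is required")
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as a large integer bucket number.
    bucket = int.from_bytes(digest[:8], "big") % len(variations)
    return variations[bucket]


if __name__ == "__main__":
    pages = ["control", "new-screenshots"]
    for visitor in ["visitor-1", "visitor-2", "visitor-3"]:
        print(visitor, "->", assign_variation(visitor, pages))
```

Because the assignment depends only on the visitor ID and the experiment label, a returning visitor lands on the same variation each time, which keeps the comparison between pages clean.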

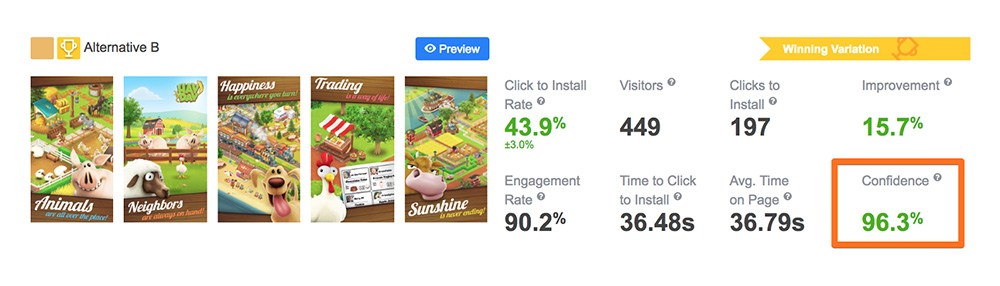

Once you know how potential users react to different elements, you can identify and implement the winning variation to grow conversions and organic traffic.

Sounds like a complete game changer: instead of acquiring more users, you double installs with simple element optimization.

Despite the apparent simplicity, there are some basic A/B testing rules that mobile marketers and developers tend to overlook when they first start working with optimization. In this post, we will analyze the reasons behind A/B testing failures and show what to do to make app A/B testing bring significant results.

Most Developers Fail in App A/B Testing. Here’s why:

1. They choose the wrong method of app A/B testing

To be able to predict A/B testing results, you need to conduct deep research into your audience and competitors and make sure it is free from bias. Ideally, developers should not lean towards any of the options they test.

However, even this does not guarantee a positive A/B testing outcome.

Confident in their hypothesis, developers upload changes sequentially and measure results right on the live version of the app page. In most cases, this leads to a drop in conversions. Since it takes time to revert to a previous version, such hasty experiments ultimately cost installs and money the developer could have earned.

What to do instead: You do not put the live version of the page at risk if you run A/B experiments with A/B testing software such as SplitMetrics. It creates web pages emulating the App Store where you can change and edit any element of the page (screenshots, icons, etc.). Then you drive traffic to these pages to see what works and what doesn’t.

2. Their sample size is too small

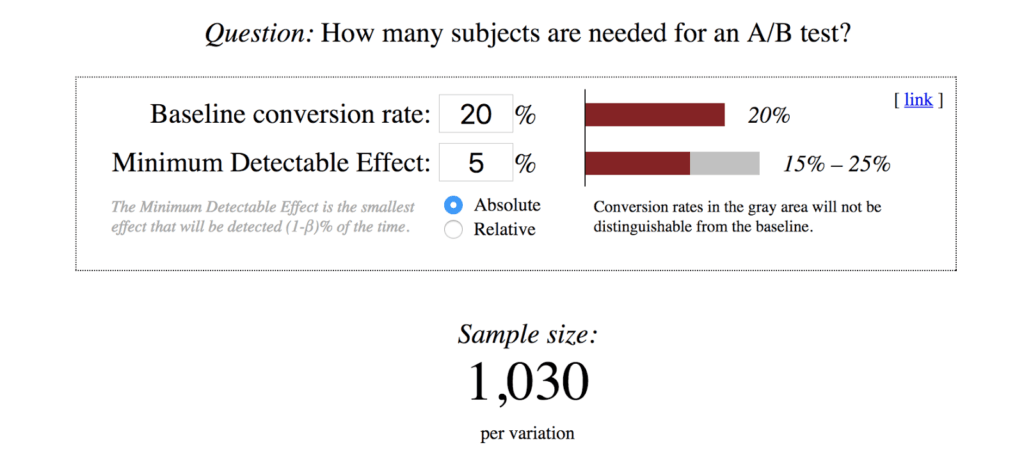

It is hardly possible to make a sound decision by asking only 10 or 100 people, as such a small sample gives a low confidence level. In other words, a small group of potential users cannot represent the whole audience and can mislead you about A/B testing results.

What to do instead: When it comes to app A/B testing, the larger your sample size, the more accurate your results.

With a limited A/B testing budget, you will want to estimate the sample size you need with a sample size calculator (e.g. Evan’s). This will ensure the group of potential users you are asking is big enough to produce statistically significant results.
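To give a feel for what such a calculator does, here is a minimal sketch based on the textbook sample size formula for comparing two conversion rates. It is an approximation under stated assumptions (a two-sided test, 5% significance, 80% power), the function name `sample_size_per_variation` is made up for this example, and its output may differ somewhat from any particular online calculator.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, min_detectable_lift,
                              alpha=0.05, power=0.8):
    """Approximate visitors needed per variation for a two-sided test.

    baseline_rate       -- current conversion rate, e.g. 0.26 for 26%
    min_detectable_lift -- smallest absolute change worth detecting, e.g. 0.05
    alpha               -- accepted false-positive rate (assumed 5%)
    power               -- chance of detecting a real effect (assumed 80%)
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2

    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Visitors per variation to detect a move from 26% to 31%.
    print(sample_size_per_variation(0.26, 0.05))
    # A smaller lift (26% to 27%) needs far more visitors.
    print(sample_size_per_variation(0.26, 0.01))
```

The smaller the difference you want to detect, the more visitors each variation needs, which is why tiny expected gains are expensive to verify on a limited budget.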

3. They run chaotic A/B experiments

What you don’t want to do is neglect pre-experiment research and analysis. App developers commonly fail when they run chaotic experiments in the hope of stumbling upon places for improvement.

Although good research takes some time, chaotic changes consume twice as much time and resources and, unlike research, will not bring the desired results.

Even if a developer gets a 10% rise in conversions, random tests do not reveal the true reasons behind it.

Why did users prefer a new icon color or CTA? Perhaps it was dictated by potential users’ hidden habits, which you could use to optimize other elements and grow the conversion rate by an extra 30%. With random tests, this opportunity eludes you.

What to do instead: Take a closer look at your key user acquisition channels (paid traffic, organic search, e-commerce, etc.) and identify the bottleneck in your user acquisition funnel. Formulate the hypotheses you are going to test based on competitor research and user surveys. When developing testing strategies, focus on the steps where people bounce and the elements that influence conversions at exactly those steps.

An ASO guide will help you determine optimization techniques for each step.

4. Their interpretation of A/B testing results goes wrong

Developers often get negative or zero results from A/B experiments. Does it mean mobile A/B testing brings them no benefit? And do positive results with only a slight difference call for immediate implementation?

Most developers give up after the first negative experiment, even though their app conversion rate is far below the 26% average and requires optimization.

Conversely, they pounce on an optimized option whose conversion rate is 0.5-1% higher than the control’s and are surprised when the new design performs worse in the long run.

How to interpret A/B testing results correctly: If a hypothesis performs worse or shows no difference, A/B testing lets you see it in time and save the money you would have lost had the changes been implemented on the live version of the app page. Analyze negative results to determine a direction for future experiments.

Positive results can be misleading if you have not reached the required confidence level or the sample size was too small. In addition, if an A/B experiment has shown only a slight difference, it doesn’t guarantee a conversion boost on the live version of the store listing.

Instead of applying a questionable winner, try to emphasize the features of the winning variation and run a follow-up experiment.
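To check whether a slight difference between two pages is more than noise, one standard option is a two-proportion z-test. The sketch below is a generic statistical illustration, not the method any specific A/B testing tool uses; the function name `two_proportion_z_test` and the visitor and install counts are invented for the example.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(control_installs, control_visitors,
                          variant_installs, variant_visitors):
    """Return (z, p_value) for the difference between two conversion rates.

    Uses the pooled standard error and a two-sided p-value. A p-value below
    the chosen threshold (commonly 0.05) suggests the difference is unlikely
    to be explained by chance alone.
    """
    p_control = control_installs / control_visitors
    p_variant = variant_installs / variant_visitors
    pooled = ((control_installs + variant_installs)
              / (control_visitors + variant_visitors))
    std_err = math.sqrt(pooled * (1 - pooled)
                        * (1 / control_visitors + 1 / variant_visitors))
    if std_err == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (p_variant - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Invented numbers: 26.0% vs 27.0% conversion on 1,000 visitors each.
    z, p = two_proportion_z_test(260, 1000, 270, 1000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

With samples this small, a one-point gap between control and variant does not come close to the usual 0.05 threshold, which is the situation described above: a slight "win" that may vanish on the live listing.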

5. App developers think of A/B experiments as a one-time action

When thinking about A/B testing, developers often do not consider factors such as seasonal buying habits, demographic shifts, market changes, and product and competitor changes. Yet all of them can have a significant influence on results at different points in time.

Besides, promotions are not limited to event-themed ads on Facebook and posts on social media. App Store Optimization (ASO) also means staying ahead of the competition on the app market by taking advantage of all growth opportunities: giveaways, holidays, etc.

Can Halloween-themed creatives significantly increase conversion? What will the effect be of mentioning a holiday discount in the description? You test.

What to do instead: App A/B testing is an ongoing process in which you keep moving fast and optimize as you go. Since there is always room for optimization, you continue testing even when you are already happy with the results.

Happy A/B testing!
